People make meaning together. It happens all the time in chatrooms and private channels: a novel piece of media is shared and then contextualized by the group. Through conversation, the group annotates the media that enters the discussion space. Yet this work is underutilized. NLP tasks rely on annotated data to train models. By looping a group's natural sense-making impulses into the annotation pipeline, there is the potential to annotate the web in ways that are richer, more meaningful, and more useful. By optimizing communication tools for group sense-making, it is possible to create better LLM training sets.

I often hear that the communication tools people use don't feel right. Whether it's for work or for cultivating an emergent online community, something seems to be missing. What if this is because many of these conversations are happening in the wrong place? People love sharing websites, videos, and PDFs they're excited about. Rather than talk about a piece of media with only a preview card for reference, the conversation should continue within the media itself, affording rich context and organic, emergent insight.

This is not as crazy as it may seem. There are already groups that communicate through annotation, in conversations embedded in the document itself. Foster is a writers' group that released a Google Docs plugin allowing members to solicit feedback directly from the document they're working in. Writers specify the kind of feedback they're looking for, and community members comment directly in the document based on the request.

Similarly, Hypothesis is a tool for annotating the web with friends. The Hypothesis browser plugin lets users highlight passages in HTML documents and PDFs and comment on them from the plugin sidebar. Users can highlight and annotate within a group or make their annotations visible to anyone using the plugin.

Both of these products create an intimate, organic format in which discussion happens not in a centralized chat but embedded within the context of the document.

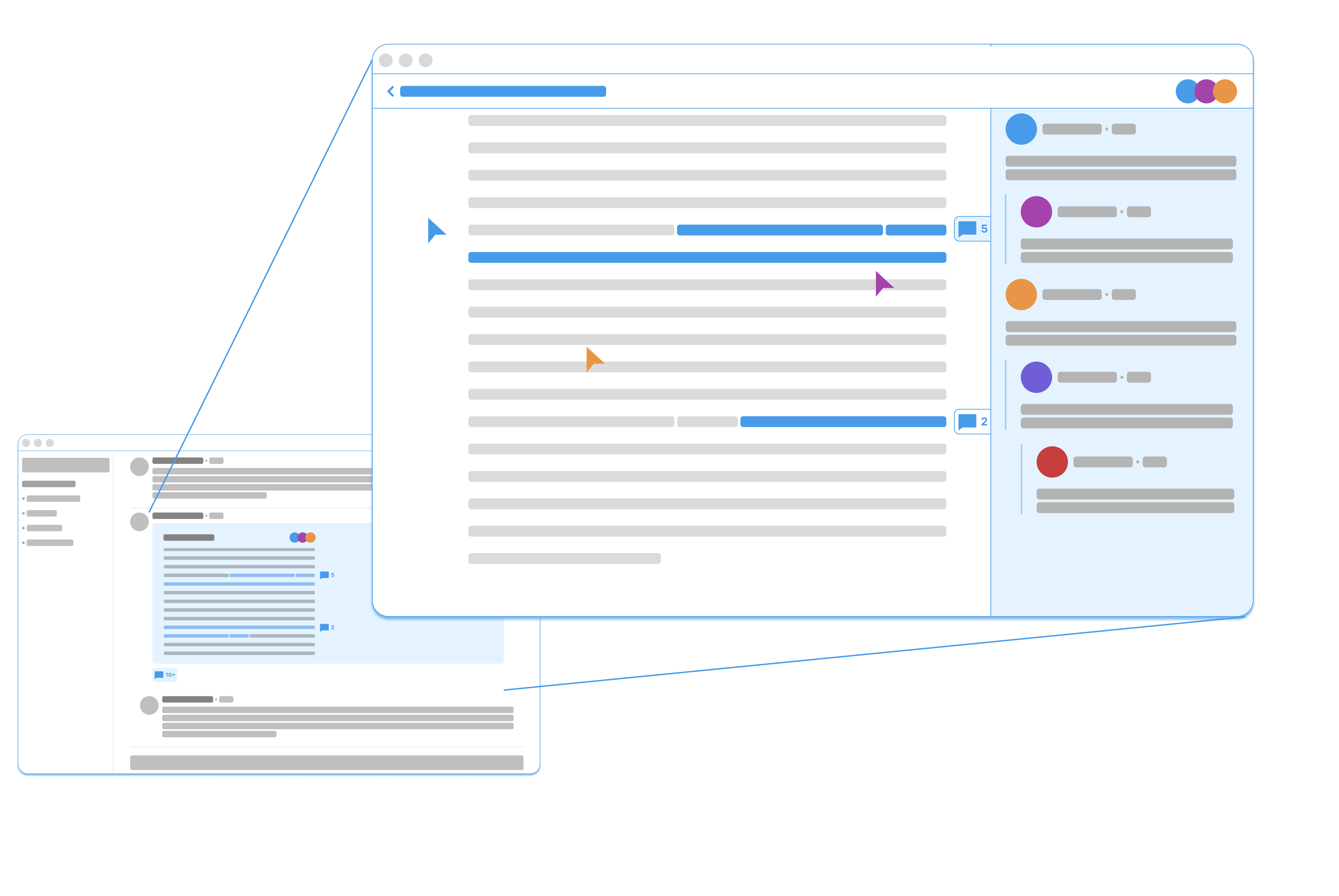

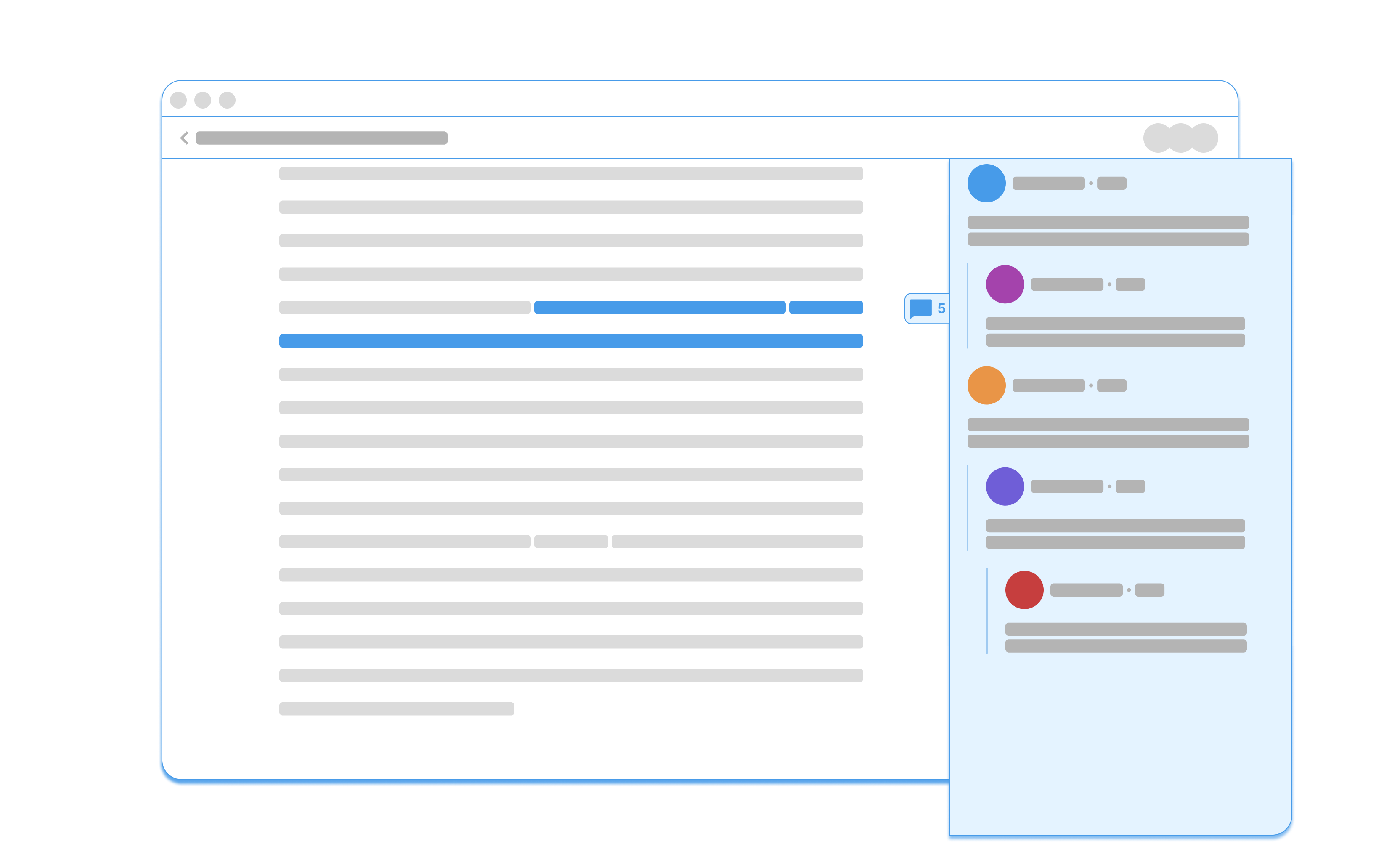

Now imagine if something like this could be spun up any time a person pastes a link or shares a document in a group chat. Organic discussion would continue uninterrupted within the document. Referencing specific elements of the document in conversation improves sense-making, as people draw meaning both from the document itself and from the embedded annotations of group members.

This shifts the balance of communication from referencing media to engaging with it. Conversation happens through highlighting key pieces of information: each thread is initiated by highlighting content. Situating conversation in the media being discussed makes it clearer, and it quickly orients group members to the parts of the document that matter most to the group's reading. In short, it gives groups a better set of tools for collective sense-making.
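A minimal sketch of how a highlight could start a thread, assuming plain-text documents. The selector shape (the exact highlighted text plus a short prefix and suffix) is borrowed loosely from the TextQuoteSelector in the W3C Web Annotation Data Model; the `Thread`, `make_selector`, and `anchor` names are hypothetical and not taken from any product mentioned here. Storing context around the quote lets the highlight be found again even after the text shifts.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class TextQuoteSelector:
    """Anchors a highlight by its exact text plus a little surrounding context."""
    exact: str
    prefix: str = ""
    suffix: str = ""


@dataclass
class Message:
    author: str
    body: str


@dataclass
class Thread:
    """Hypothetical: a conversation thread that starts from a highlight."""
    selector: TextQuoteSelector
    messages: list[Message] = field(default_factory=list)

    def reply(self, author: str, body: str) -> None:
        self.messages.append(Message(author, body))


def make_selector(text: str, start: int, end: int, context: int = 20) -> TextQuoteSelector:
    """Turn the character range a user highlighted into a quote selector."""
    return TextQuoteSelector(
        exact=text[start:end],
        prefix=text[max(0, start - context):start],
        suffix=text[end:end + context],
    )


def anchor(text: str, sel: TextQuoteSelector) -> Optional[tuple[int, int]]:
    """Re-locate a highlight, using prefix/suffix to pick between repeated quotes."""
    best = None  # (score, start) of the best-matching occurrence so far
    start = text.find(sel.exact)
    while start != -1:
        end = start + len(sel.exact)
        # One point each for matching context before and after the quote.
        score = text[:start].endswith(sel.prefix) + text[end:].startswith(sel.suffix)
        if best is None or score > best[0]:
            best = (score, start)
        start = text.find(sel.exact, start + 1)
    if best is None:
        return None
    return best[1], best[1] + len(sel.exact)


doc = "The cat sat. The cat ran. The dog sat."
sel = make_selector(doc, 13, 20)  # the user highlights the second "The cat"
thread = Thread(sel)
thread.reply("ana", "Why does the cat run here?")
print(anchor(doc, sel))              # (13, 20)
print(anchor("Intro. " + doc, sel))  # (20, 27): still found after the text shifts
```

Images and live web frames would need other selector types (regions, DOM ranges), but the idea of a thread hanging off an anchor stays the same.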

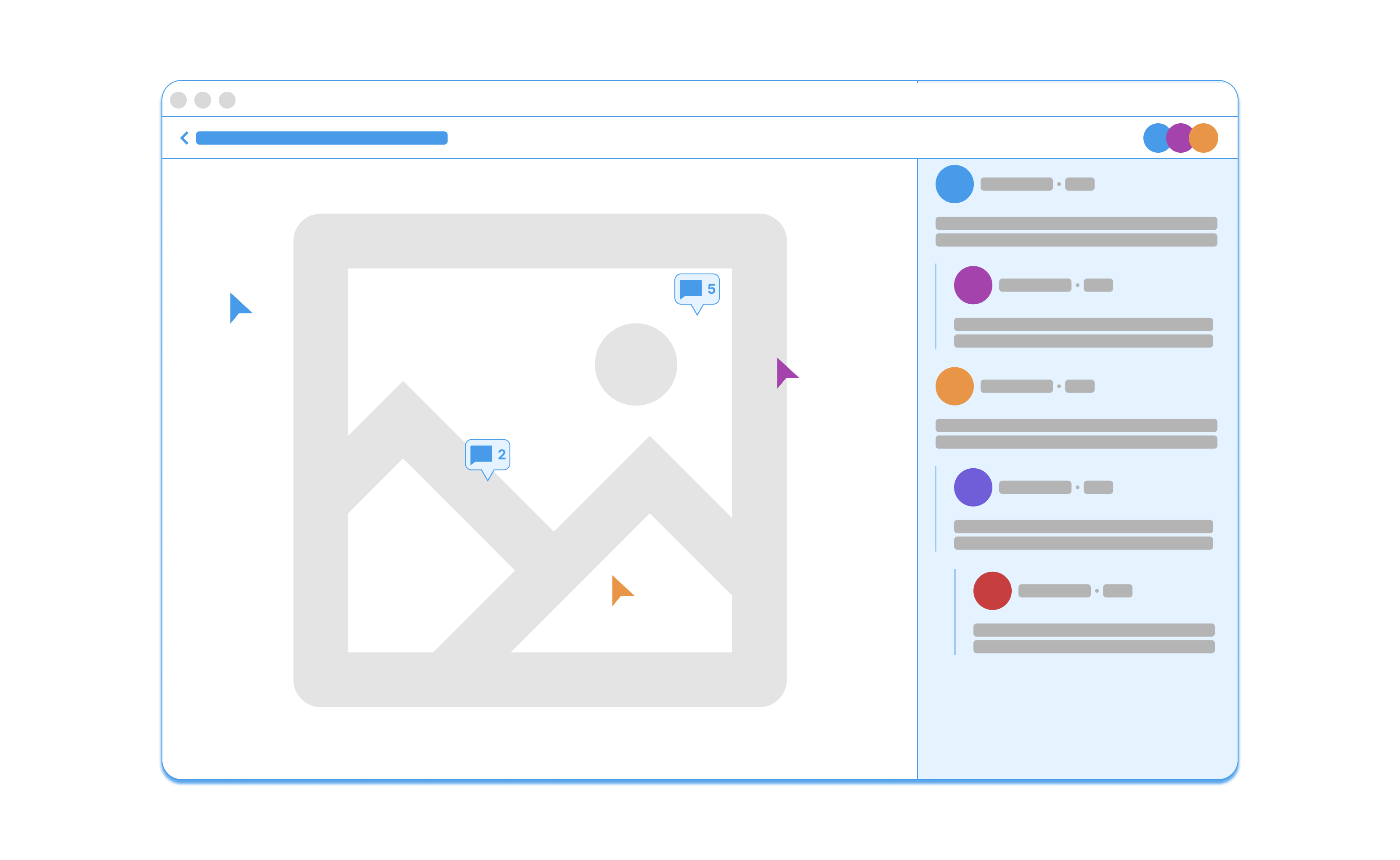

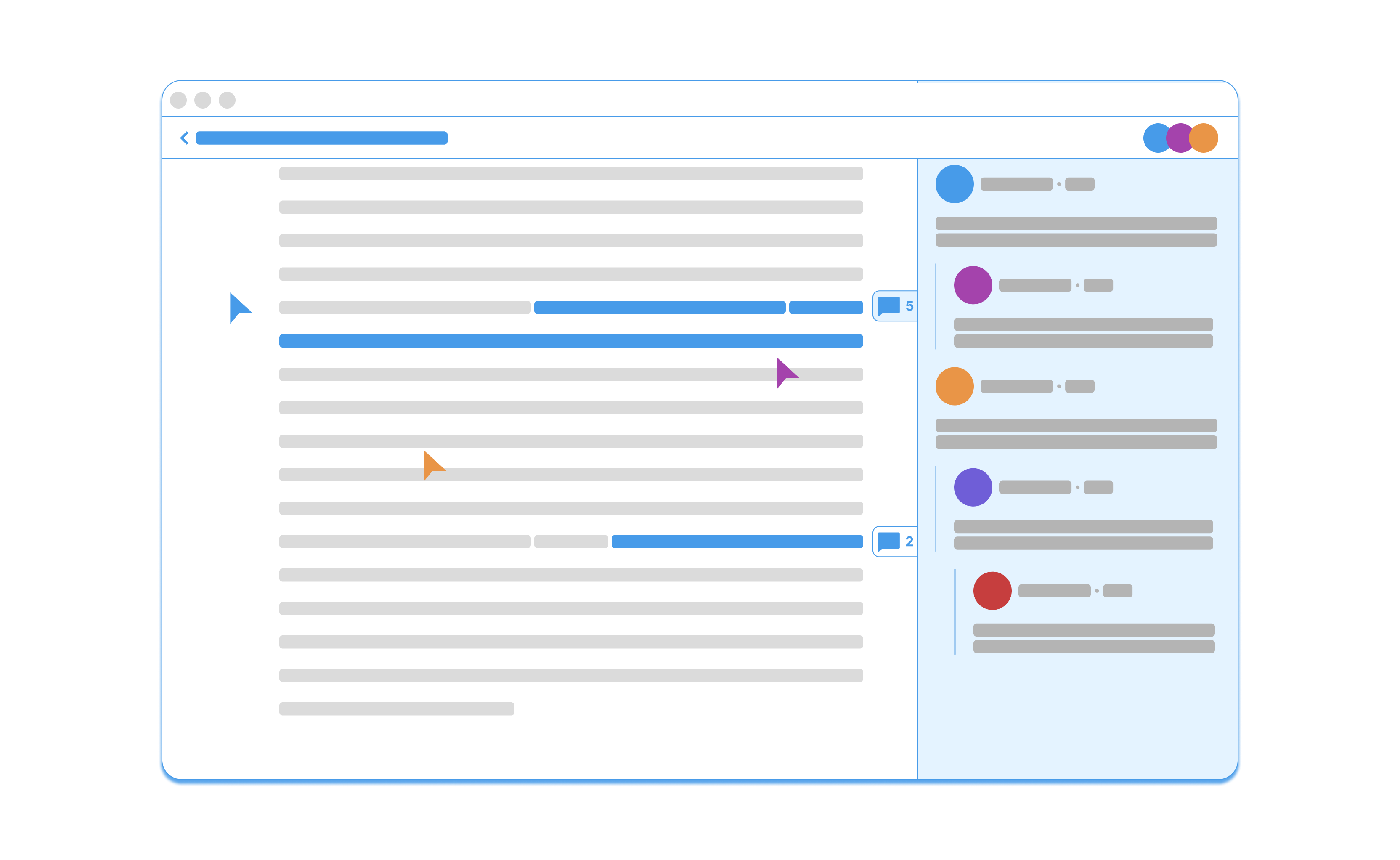

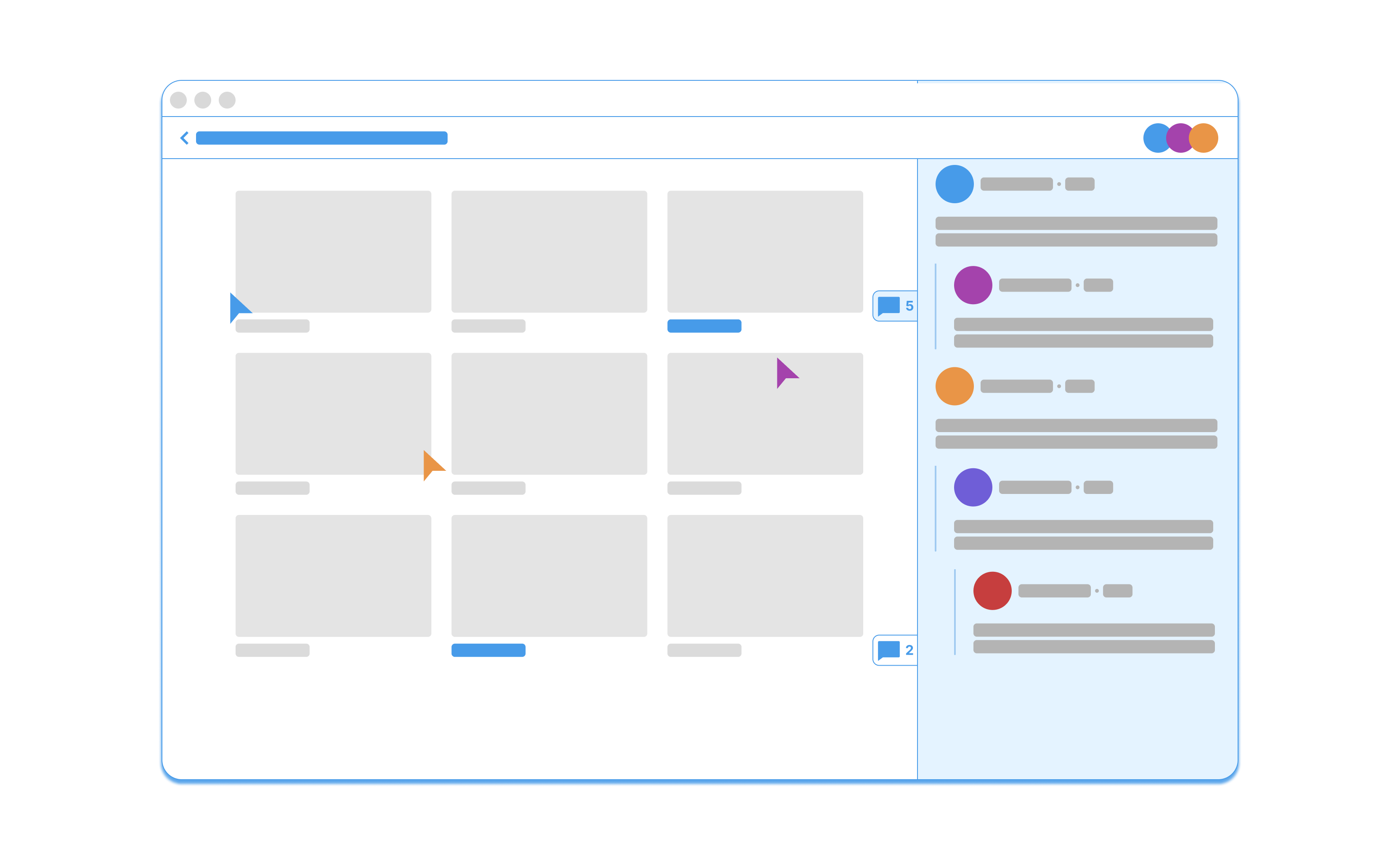

This approach can work across various media types.

Users can annotate images, commenting directly on what they see. Whiteboarding tools, such as a pencil, could also let users annotate visually.

Users can annotate PDFs, highlighting text and spinning up threads from the highlights.

Users can annotate websites: a live frame of the URL is spun up that users can interact with, highlight, and comment on.

Importantly, the instance is framed within the context of the group chat, maintaining a cognitive scaffold for the conversation. This contextualizes the document, orienting users so that they view it through the lens of the group. A multiplayer view of cursors can also help reinforce the boundaries of the group space.

Datasets are more effective when they contain high-quality metadata. But improved metadata is not only useful for training sets. A big problem facing group chats is poor indexing of media: a book or PDF is shared and later proves difficult to find with search tools.

Communication tools can facilitate the collection and confirmation of metadata by prioritizing this activity. Not only would it be nice to spin up a bibliography of all the media mentioned in a group chat, it would also improve sense-making. Group recall would improve, conversations around documents would become more portable, and past knowledge creation would be brought into closer connection with the present.
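A bibliography of shared media could start as simply as collecting every link posted in a channel. The sketch below assumes messages arrive as simple (author, timestamp, text) records with same-format ISO 8601 timestamps; `ChatMessage`, `BibEntry`, and `build_bibliography` are hypothetical names, and the URL pattern is deliberately rough.

```python
import re
from dataclasses import dataclass

# Rough URL matcher: stops at whitespace, quotes, angle brackets, and closing brackets.
URL_RE = re.compile(r"https?://[^\s<>\"')\]]+")


@dataclass
class ChatMessage:
    author: str
    timestamp: str  # same-format ISO 8601 strings, e.g. "2025-01-05T10:00", sort chronologically
    text: str


@dataclass
class BibEntry:
    url: str
    first_shared_by: str
    first_shared_at: str
    mentions: int = 1


def build_bibliography(messages: list[ChatMessage]) -> list[BibEntry]:
    """Collect every link shared in a channel, keeping who shared it first."""
    entries: dict[str, BibEntry] = {}
    for msg in sorted(messages, key=lambda m: m.timestamp):
        for url in URL_RE.findall(msg.text):
            url = url.rstrip(".,;:!?")  # drop sentence punctuation after a link
            if url in entries:
                entries[url].mentions += 1
            else:
                entries[url] = BibEntry(url, msg.author, msg.timestamp)
    return list(entries.values())


messages = [
    ChatMessage("ana", "2025-01-05T10:00", "Check https://example.com/paper.pdf."),
    ChatMessage("ben", "2025-01-06T09:00",
                "Back to https://example.com/paper.pdf and (https://example.org/video)"),
]
for entry in build_bibliography(messages):
    print(entry.url, entry.mentions, entry.first_shared_by)
# https://example.com/paper.pdf 2 ana
# https://example.org/video 1 ben
```

A raw list of links only makes media findable by URL; resolving each entry to a title, author, and date is where confirmed metadata would come in.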

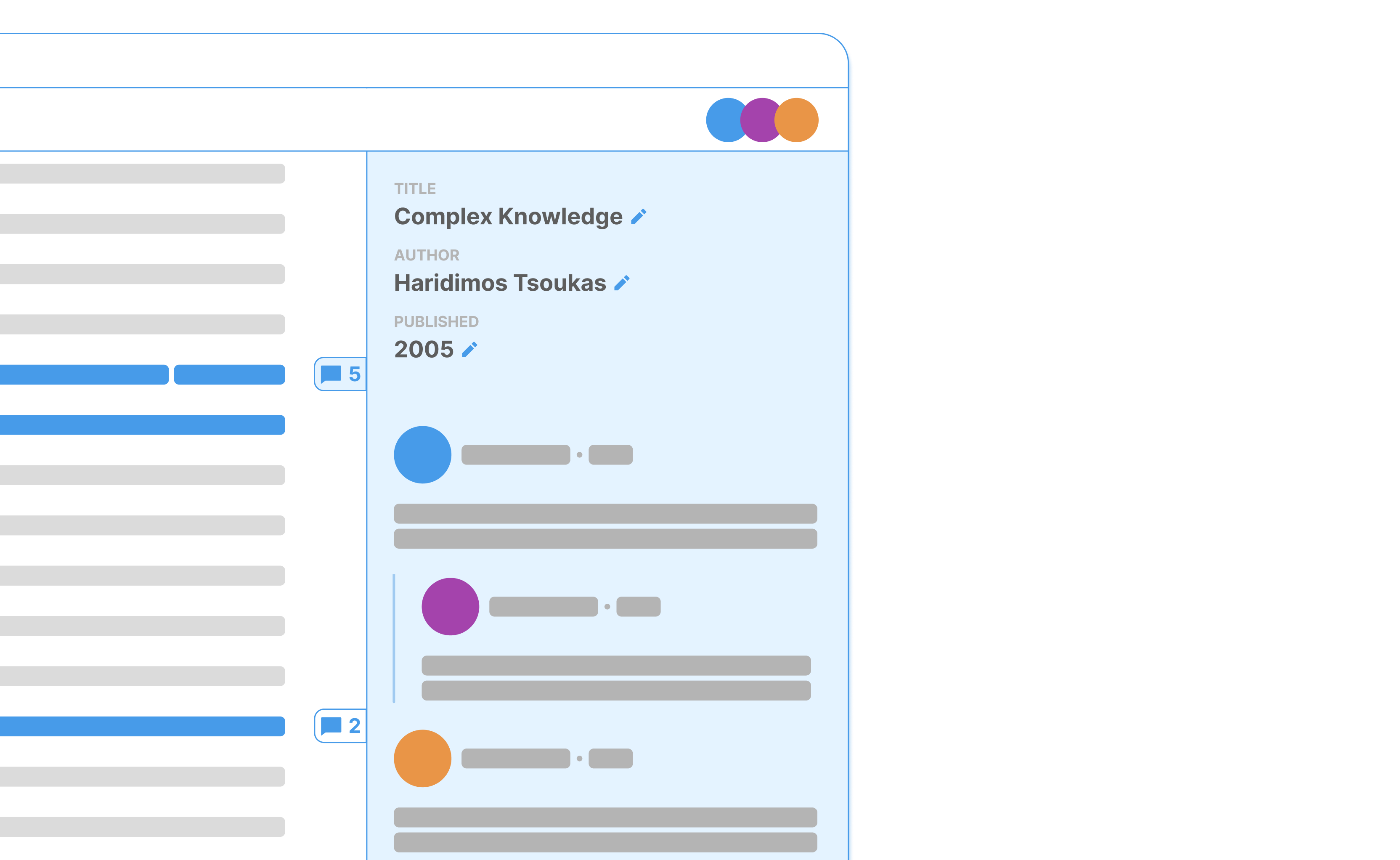

Metadata fields must be highly visible in any instance or document. AI should suggest values for fields such as author, publication date, and title. These can often be pulled directly from the head of a document and will require little oversight.

However, other fields, such as a summary or description, will require input from the community. The tool could also pose questions that directly improve the training set, such as: Why is this media being shared with the community? Why is or isn't it a good fit for the channel?
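For the fields that can be read straight from a document's head (author, publication date, title), a deterministic pass could pre-fill suggestions before any AI or community input. This sketch uses Python's standard `html.parser`; the mapping from `<meta>` names to fields is an assumption covering a few common conventions (Open Graph-style `og:title`, `article:*`, and scholarly `citation_*` tags), and real pages vary. `suggest_metadata` is a hypothetical helper.

```python
from html.parser import HTMLParser

# Assumed mapping from common <meta> name/property values to metadata fields.
META_KEYS = {
    "author": "author",
    "article:author": "author",
    "citation_author": "author",
    "og:title": "title",
    "citation_title": "title",
    "article:published_time": "publication_date",
    "citation_publication_date": "publication_date",
}


class HeadMetadataParser(HTMLParser):
    """Collects <title> text and the first value seen for each mapped <meta> field."""

    def __init__(self) -> None:
        super().__init__()
        self.fields: dict[str, str] = {}
        self.title_parts: list[str] = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta":
            key = (attrs.get("name") or attrs.get("property") or "").lower()
            content = attrs.get("content")
            name = META_KEYS.get(key)
            if name and content and name not in self.fields:
                self.fields[name] = content.strip()

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title_parts.append(data)


def suggest_metadata(html: str) -> dict[str, str]:
    """Return suggested fields; <title> is used only if no meta title exists."""
    parser = HeadMetadataParser()
    parser.feed(html)
    parser.close()
    fields = dict(parser.fields)
    title = "".join(parser.title_parts).strip()
    if title and "title" not in fields:
        fields["title"] = title
    return fields
```

These are suggestions for members to confirm, not final values; summaries, descriptions, and answers to questions like "why is this being shared?" still have to come from the group.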

All of this requires work from community members, and making the leap to a novel form factor would require commitment. Communities would need a reason to make that leap: their incentives must be aligned with the outcomes.

Folklore offers a glimpse of what this alignment might look like. This group has begun pioneering the creation of a community mind through conversation and the selective curation of media. In Intuitive Feedback Loops, I explore novel approaches to modifying the parameters of a community AI and possible ways its services could be licensed to create a revenue stream for the community.

Groups annotate documents effectively when they're interested in the content. They highlight key sections and discuss the areas that interest them. By situating group conversation in this context and prioritizing metadata collection, incentives can be aligned for both improved sense-making and the curation of community-trained and community-operated AIs. Online communities have the potential to draw on their collective wisdom by training AI agents while simultaneously improving the quality of conversation and the group's capacity to recall its sense-making over time. We're on the cusp: all that's missing are tools that optimize existing behaviors and impulses.


© 2025